Adding Non-kinetic Models to VR-Forces

by Pete Swan, Director of International Business

It was my great privilege and pleasure to present at the recent NATO CA2X2 Forum in Rome.

My presentation was about a proof of concept that MAK’s Principal Engineer, Dr. Doug Reece, recently conducted to investigate whether VR-Forces could be used to create non-kinetic models and integrate them into a kinetic simulation environment.

VR-Forces has always been excellent at modeling the physical dimension, where people and vehicles move around, interact with the terrain, and engage each other. But could it be used to model the informational and cognitive dimensions?

It turns out, it can!

VR-Forces includes a set of simulation infrastructure services that can be used equally well for kinetic and non-kinetic models. These services include the synthetic environment (terrain, weather, ocean), scenario authoring, execution and control, 2D and 3D visualization, data capture, and distributed simulation interoperation.

Objects are the key building blocks of the VR-Forces simulation engine. Objects are composed of systems of components and are fully configurable through the parameter database and state variables. The behavior of objects can be programmed by a developer (in a plugin) or created by a user with plans or scripts.

You can define new kinds of objects and give them behaviors, states, resources, and capabilities. These objects could be individual soldiers, vehicles, or units. They could also be a physical building representing, for example, a command center or hospital, or an area represented by lines on a map, such as a population area.

VR-Forces already includes models of the informational dimension, such as electronic warfare (EW) and communications, so our focus was on the cognitive dimension.

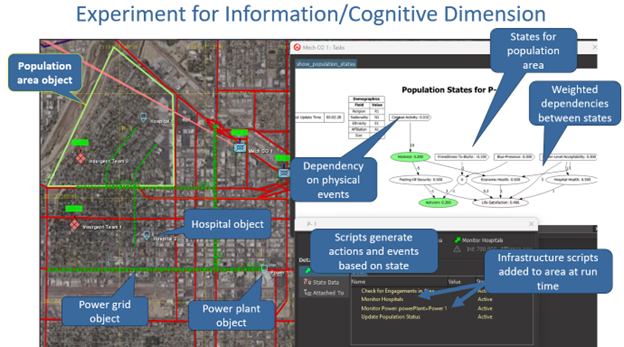

The vision of the proof of concept was to create a simulation centered on a local civilian population and how changes in the infrastructure affect it. Doug therefore created new types of objects in VR-Forces representing the local population and infrastructure, added state variables to capture the aspects of their state relevant to the cognitive dimension, and created new computational models that modified that state and specified the relationships between cognitive variables and the physical world, all within the existing VR-Forces infrastructure.
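To make the idea of "a computational model relating a cognitive variable to the physical world" concrete, here is a minimal sketch in plain Python. It is not VR-Forces code and not the model used in the experiment: the `CognitiveVariable` class, the `rate` parameter, and the choice of a first-order model in which the variable relaxes toward a physical-world driver (for example, the fraction of power service available) are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class CognitiveVariable:
    """Hypothetical cognitive state variable in [0, 1], e.g. population satisfaction."""
    value: float
    rate: float  # assumed adjustment rate, per hour

    def step(self, driver: float, dt_hours: float) -> float:
        """Advance one explicit-Euler step of dv/dt = rate * (driver - v).

        `driver` is a physical-world input mapped to [0, 1], such as the
        fraction of the local power grid that is energized (an assumption).
        """
        self.value += self.rate * dt_hours * (driver - self.value)
        # Clamp so the variable stays a valid fraction even for large steps.
        self.value = min(1.0, max(0.0, self.value))
        return self.value


# Power availability drops to 20%; satisfaction decays toward it hour by hour.
satisfaction = CognitiveVariable(value=1.0, rate=0.5)
history = [satisfaction.step(driver=0.2, dt_hours=1.0) for _ in range(3)]
print(history)
```

With these assumed parameters the three steps give roughly 0.6, 0.4, and 0.3: the variable closes half the remaining gap to the driver each hour. A first-order lag is used here only because it is the simplest dynamic model that reacts gradually rather than instantly to infrastructure changes.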

The power grid was created from a set of lines defined in a shapefile, a standard format for geographic feature data. The population area is configured with one script for each relevant type of modeled infrastructure (e.g., “Monitor Power”).

Each such infrastructure script causes the population area to find the infrastructure within its boundaries. The script also adds the infrastructure state to the cognitive model for the population area.
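The script logic described above (find the infrastructure inside the area, then feed its state into the area's cognitive model) can be sketched in plain Python. This is not VR-Forces scripting: `PopulationArea`, `PowerLine`, `monitor_power`, and the `power_availability` variable are hypothetical names, and treating a line as inside the area when any of its vertices falls within the boundary polygon (tested by ray casting) is a simplifying assumption.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]


def point_in_polygon(p: Point, polygon: list[Point]) -> bool:
    """Ray-casting test: toggle on each polygon edge crossed by a ray cast to +x."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y value
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


@dataclass
class PowerLine:
    """Hypothetical power-grid segment, e.g. loaded from shapefile line features."""
    name: str
    vertices: list[Point]
    energized: bool = True


@dataclass
class PopulationArea:
    boundary: list[Point]
    cognitive_state: dict[str, float] = field(default_factory=dict)
    monitored: list[PowerLine] = field(default_factory=list)

    def monitor_power(self, grid: list[PowerLine]) -> None:
        """Find grid lines within the boundary and add their state to the model."""
        # Simplification: a line counts if any of its vertices lies inside.
        self.monitored = [
            line for line in grid
            if any(point_in_polygon(v, self.boundary) for v in line.vertices)
        ]
        self.update_power_state()

    def update_power_state(self) -> None:
        """Expose the fraction of monitored lines that are energized."""
        if not self.monitored:
            return  # nothing to monitor, so no power variable is added
        up = sum(line.energized for line in self.monitored)
        self.cognitive_state["power_availability"] = up / len(self.monitored)


square = [(0, 0), (10, 0), (10, 10), (0, 10)]
grid = [
    PowerLine("A", [(1, 1), (5, 5)]),
    PowerLine("B", [(20, 20), (30, 30)]),
    PowerLine("C", [(8, 2), (15, 2)], energized=False),
]
area = PopulationArea(square)
area.monitor_power(grid)
print([line.name for line in area.monitored], area.cognitive_state)
```

Here lines A and C touch the square area and line B does not, so the area monitors two lines, one of which is down, giving a `power_availability` of 0.5. A real implementation would more likely use proper line/polygon intersection than a vertex test.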

Each infrastructure object computes its own state from simulation events.
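One way an infrastructure object might derive its state from simulation events is a small event handler that updates internal flags and recomputes a derived status. The sketch below is plain Python under stated assumptions: the `Substation` class and the four `Event` kinds are hypothetical and are not VR-Forces event types.

```python
from enum import Enum


class Event(Enum):
    """Hypothetical simulation events relevant to a power substation."""
    DAMAGED = "damaged"
    REPAIRED = "repaired"
    UPSTREAM_LOST = "upstream_lost"
    UPSTREAM_RESTORED = "upstream_restored"


class Substation:
    """Hypothetical infrastructure object that computes its own state from events."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.intact = True  # physical condition of the substation itself
        self.fed = True     # whether upstream supply is reaching it

    @property
    def energized(self) -> bool:
        # Derived state: power flows only if the substation is intact and fed.
        return self.intact and self.fed

    def handle(self, event: Event) -> bool:
        """Apply one event and return the resulting energized state."""
        if event is Event.DAMAGED:
            self.intact = False
        elif event is Event.REPAIRED:
            self.intact = True
        elif event is Event.UPSTREAM_LOST:
            self.fed = False
        elif event is Event.UPSTREAM_RESTORED:
            self.fed = True
        return self.energized


station = Substation("North")
sequence = [Event.DAMAGED, Event.UPSTREAM_LOST, Event.REPAIRED, Event.UPSTREAM_RESTORED]
states = [station.handle(e) for e in sequence]
print(states)
```

Keeping the physical causes (`intact`, `fed`) separate from the derived `energized` status means a repair alone does not restore power while the upstream supply is still lost, which is the kind of dependency a cognitive model downstream would want to see.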

All of the GUI features shown here (the map, map graphics, task information for the population area, and the graphical display of script state) use existing VR-Forces capabilities. No new code other than scripts was written for this experiment.

The experiment showed that it is feasible to extend VR-Forces into the information and cognitive dimensions. Taskable graphic objects provide the means to attach models to elements in the information and cognitive dimensions (e.g., populations, power grids). The Behavior Engine can be used to develop standard computational models and simplify the coding of dynamic systems. Models in all three dimensions can run together in a common simulation. Implementation within the existing VR-Forces framework means that the rich simulation infrastructure is available to all dimensions.

I’ve always known that VR-Forces is flexible, but until this exercise I didn’t realize just how flexible. It can be used for a multitude of modeling applications; it just takes imagination, thought, and careful design.

I’m looking forward to I/ITSEC, which is coming up soon. If you’d like to see a demo of VR-Forces or any of the MAK ONE suite of products, fill out the form below so we can connect at the show!